A Closer Look at ChatGPT’s Role in Automated Malware Creation

In addition to the previously mentioned challenges, ChatGPT cannot generate custom paths, file names, IP addresses, or command and control (C&C) details over which a user would want to have full control. While it is possible to specify all these variables in the prompt, this approach is not scalable for more complex applications.

Moreover, while ChatGPT 3.5 has shown promise in performing basic obfuscation, these models are not well suited to heavy obfuscation and code encryption. Custom-made techniques can be difficult for LLMs to understand and implement, and the obfuscation generated by the model may not be strong enough to evade detection. Additionally, ChatGPT is not allowed to encrypt code, as encryption is often associated with malicious purposes, and this is not within the scope of the model’s intended use.

Insights from code generation tests

Our testing of ChatGPT 3.5’s code generation capabilities yielded some interesting results. We evaluated the model’s ability to generate ready-to-use code snippets and assessed how often they delivered the requested output:

Code modification. All tested code snippets needed to be modified to properly execute. These modifications ranged from minor tweaks, such as renaming paths, IPs, and URLs, to significant edits, including changing the code logic or fixing bugs.

Success in delivering the desired outcome. Around 48% of tested code snippets failed to deliver what was requested (42% fully succeeded and 10% partially succeeded). This highlights the model’s current limitations in accurately interpreting and executing complex coding requests.

Error rate. Of all tested code snippets, 43% had errors. Some of these errors were also present in code snippets that were successful in delivering the desired output. This could suggest potential issues in the model’s error-handling capabilities or code-generation logic.

MITRE techniques breakdown. The MITRE Discovery techniques were the most successful (with a 77% success rate), possibly due to their less complex nature or better alignment with the model’s training data. The Defense Evasion techniques were the least successful (with a 20% success rate), potentially due to their complexity or the model’s lack of training data in these areas.
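The outcome figures above can be cross-checked with a short tally. The following is only a sketch: the article does not state how many snippets were tested, so the sample of 100 results is hypothetical and sized purely so that each share matches the reported percentage. The function name `outcome_rates` and the labels are likewise illustrative.

```python
from collections import Counter


def outcome_rates(outcomes):
    """Return each outcome label's share of the total, in percent (one decimal)."""
    if not outcomes:
        raise ValueError("no outcomes to summarize")
    counts = Counter(outcomes)
    total = len(outcomes)
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Hypothetical sample: 100 results chosen only to reproduce the reported shares
# (48% failed, 42% fully succeeded, 10% partially succeeded).
sample = ["failed"] * 48 + ["succeeded"] * 42 + ["partial"] * 10

rates = outcome_rates(sample)

# Share of snippets that delivered at least part of what was requested.
at_least_partial = rates["succeeded"] + rates["partial"]

print(rates)
print(at_least_partial)
```

Read this way, the three categories account for all tested snippets, and just over half of them delivered at least part of the requested output.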

Although the model shows promise in certain areas, such as with Discovery techniques, it struggles with more complex tasks. This suggests that while AI can assist in code generation, human oversight and intervention remain crucial. Techniques are available to make the most of ChatGPT’s skills and help users overcome limitations in generating code snippets, but it is important to note that code generation with ChatGPT is not yet a fully automated approach and may not be scalable for all use cases.

Final thoughts: Balancing ChatGPT’s potential and pitfalls in AI malware generation

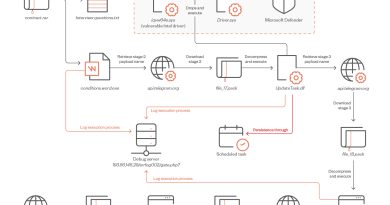

While AI technologies have made significant strides in automating various tasks, we’ve found that it’s still not possible to use an LLM to fully automate the malware creation process without significant prompt engineering, error handling, model fine-tuning, and human supervision. This is despite several reports published throughout this year that showcase proofs of concept aiming to use ChatGPT for automated malware creation.

However, it’s important to note that these LLMs can simplify the initial malware coding steps, especially for those who already understand the entire malware creation process. This ease of use could potentially make malware creation more accessible to a wider audience and expedite it for experienced malware coders.

The ability of models like ChatGPT 3.5 to learn from previous prompts and adapt to user preferences is a promising development. This adaptability can enhance the efficiency and effectiveness of code generation, making these tools valuable assets in many legitimate contexts. Furthermore, the capacity of these models to edit code and modify its signature could potentially undermine hash-based detection systems, although behavioral detection systems would likely still succeed.
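From a defender’s point of view, the weakness of hash-based detection mentioned above can be illustrated with a harmless example. This is a minimal sketch, not a description of any particular product: it assumes a detector that only compares SHA-256 digests against a set of known hashes, and the helper names `sha256_hex` and `matches_known_hash` are hypothetical.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


# A harmless one-line script stands in for a file that is already catalogued.
original = b"print('hello')\n"

# A functionally identical variant: only a trailing comment was added.
edited = original + b"# harmless trailing comment\n"

# Assumed detector model: an exact-match lookup against known digests.
known_hashes = {sha256_hex(original)}


def matches_known_hash(data: bytes) -> bool:
    """Return True only if the exact bytes have been catalogued before."""
    return sha256_hex(data) in known_hashes


print(matches_known_hash(original))  # exact bytes are in the known set
print(matches_known_hash(edited))    # edited bytes hash to a different digest
```

Because any change to the bytes yields a different digest, an exact-match lookup no longer recognizes the edited file even though its behavior is unchanged, which is why behavior-based detection remains important.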

The potential for AI to quickly generate a large pool of code snippets, ones that can be used to create different malware families and can potentially bolster malware’s detection evasion capabilities, is a concerning prospect. However, the current limitations of these models provide some reassurance that such misuse is not yet fully feasible.

Considering these findings and despite its immense potential, ChatGPT is still limited when it comes to fully automated malware creation. As we continue to develop and refine these technologies, it is crucial to maintain a strong focus on safety and ethical use to ensure that they serve as tools for good as opposed to instruments of harm.
