The science behind Microsoft Threat Protection: Attack modeling for finding and stopping evasive ransomware

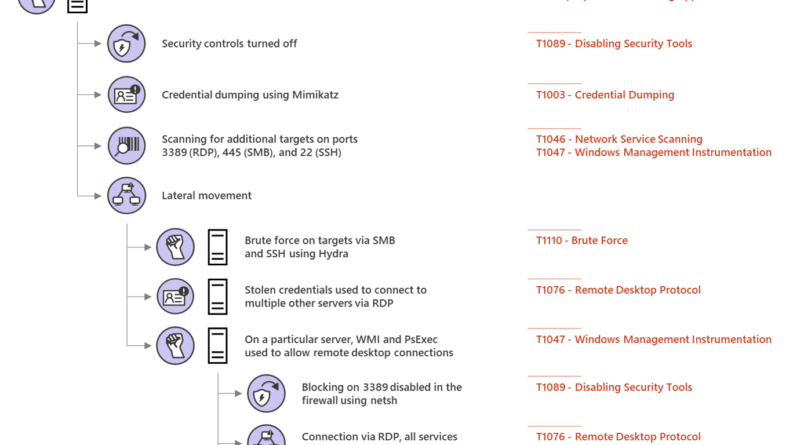

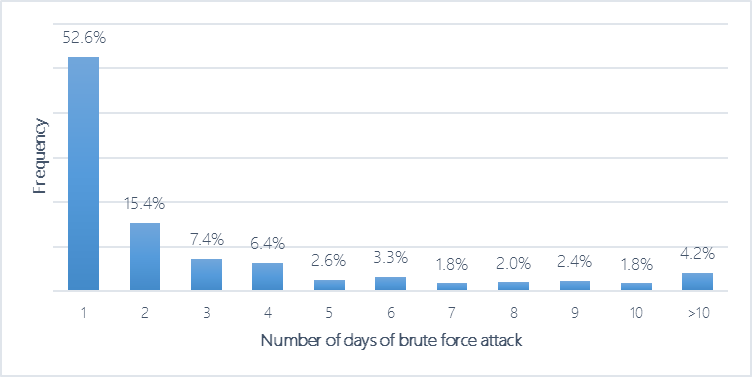

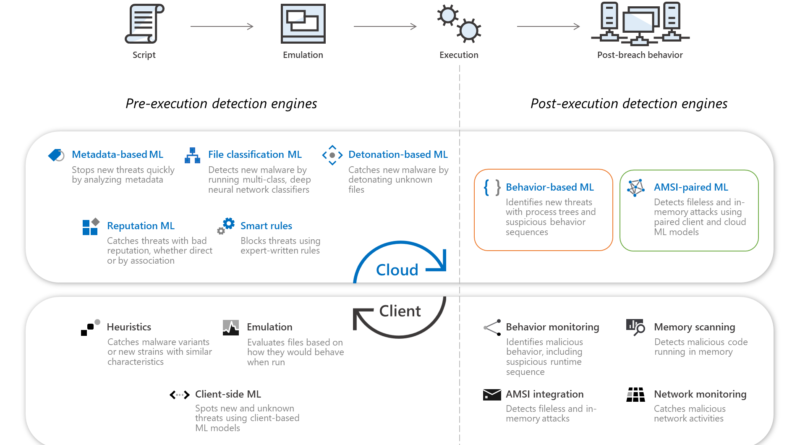

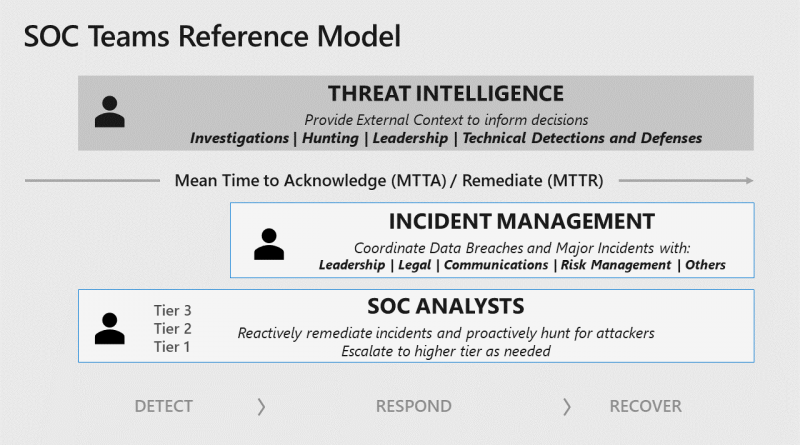

Microsoft Threat Protection uses a data-driven approach for identifying lateral movement, combining industry-leading optics, expertise, and data science to deliver automated discovery of some of the most critical threats today.

The post The science behind Microsoft Threat Protection: Attack modeling for finding and stopping evasive ransomware appeared first on Microsoft Security.