Introducing Kernel Data Protection, a new platform security technology for preventing data corruption

Attackers, confronted by security technologies that prevent memory corruption, like Code Integrity (CI) and Control Flow Guard (CFG), are expectedly shifting their techniques towards data corruption. They use data corruption techniques to target system security policy, escalate privileges, tamper with security attestation, and modify “initialize once” data structures, among other things.

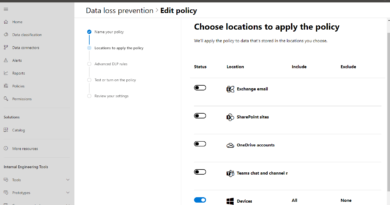

Kernel Data Protection (KDP) is a new technology that prevents data corruption attacks by protecting parts of the Windows kernel and drivers through virtualization-based security (VBS). KDP is a set of APIs that provide the ability to mark some kernel memory as read-only, preventing attackers from ever modifying protected memory. For example, we’ve seen attackers use signed but vulnerable drivers to attack policy data structures and install a malicious, unsigned driver. KDP mitigates such attacks by ensuring that policy data structures cannot be tampered with.

The concept of protecting kernel memory as read-only has valuable applications for the Windows kernel, inbox components, security products, and even third-party drivers like anti-cheat and digital rights management (DRM) software. On top of the important security and tamper protection applications of this technology, other benefits include:

- Performance improvements – KDP lessens the burden on attestation components, which would no longer need to periodically verify data variables that have been write-protected

- Reliability improvements – KDP makes it easier to diagnose memory corruption bugs that don’t necessarily represent security vulnerabilities

- Providing an incentive for driver developers and vendors to improve compatibility with virtualization-based security, improving adoption of these technologies in the ecosystem

KDP uses technologies that are supported by default on Secured-core PCs, which implement a specific set of device requirements that apply the security best practices of isolation and minimal trust to the technologies that underpin the Windows operating system. KDP enhances the security provided by the features that make up Secured-core PCs by adding another layer of protection for sensitive system configuration data.

In this blog we’ll share technical details about how Kernel Data Protection works and how it’s implemented on Windows 10, with the goal of inspiring and empowering driver developers and vendors to take full advantage of this technology designed to tackle data corruption attacks.

Kernel Data Protection: An overview

In VBS environments, the normal NT kernel runs in a virtualized environment called VTL0, while the secure kernel runs in a more secure and isolated environment called VTL1. More details on VBS and the secure kernel are available on Channel 9 here and here. KDP is intended to protect drivers and software running in the Windows kernel (i.e., the OS code itself) against data-driven attacks. It is implemented in two parts:

- Static KDP enables software running in kernel mode to statically protect a section of its own image from being tampered with by any other entity in VTL0.

- Dynamic KDP helps kernel-mode software to allocate and release read-only memory from a “secure pool”. The memory returned from the pool can be initialized only once.

The memory managed by KDP is always verified by the secure kernel (VTL1) and protected using second level address translation (SLAT) tables by the hypervisor. As a result, no software running in the NT kernel (VTL0) will ever be able to modify the content of the protected memory.

Both dynamic and static KDP are already available in the latest Windows 10 Insider Build and work with any kind of memory except executable pages. Protection for executable pages is already provided by hypervisor-protected code integrity (HVCI), which prevents any non-signed memory from ever being executable, guaranteeing the W^X condition (a page is either writable or executable, but never both). HVCI and the W^X condition are not explained in this article (refer to the upcoming Windows Internals book for further details).

Static KDP

A driver that wants a section of its image protected through static KDP should call the MmProtectDriverSection API, which has the following prototype:

NTSTATUS MmProtectDriverSection (PVOID AddressWithinSection, SIZE_T Size, ULONG Flags)

A driver specifies an address located inside a data section and, optionally, the size of the protected area and some flags. As of this writing, the “size” parameter is reserved for future use: the entire data section where the address resides will always be protected by the API.

If the function succeeds, the memory backing the static section becomes read-only for VTL0 and is protected through the SLAT. Unloading a driver that has a protected section is not allowed; attempting to do so results, by design, in a blue screen error. However, some drivers need to be unloadable, so we have introduced the MM_PROTECT_DRIVER_SECTION_ALLOW_UNLOAD flag (1). If the caller specifies it, the system will be able to unload the target driver; in that case, the protected section is first unprotected and then released by NtUnloadDriver.
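The behavior described above can be modeled in user mode. The following is a minimal sketch, not driver code: it never calls MmProtectDriverSection, and every `model_*` and `MODEL_*` name is hypothetical. Only the flag value (1) and the protect/unload rules come from the text.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Mirrors the "allow unload" flag value (1) given in the text. */
#define MODEL_ALLOW_UNLOAD 0x1u

typedef struct {
    bool read_only;     /* section is write-protected for VTL0 */
    bool allow_unload;  /* caller asked for the driver to stay unloadable */
} model_section;

typedef enum { MODEL_UNLOAD_OK, MODEL_UNLOAD_BUGCHECK } model_unload_result;

/* Models a successful protection call: the whole section becomes read-only. */
void model_protect(model_section *s, uint32_t flags) {
    assert(s != 0);
    s->read_only = true;
    s->allow_unload = (flags & MODEL_ALLOW_UNLOAD) != 0;
}

/* A VTL0 write is possible only while the section is unprotected. */
bool model_write_allowed(const model_section *s) {
    return !s->read_only;
}

/* Unloading a protected section without the flag is modeled as a bug check. */
model_unload_result model_unload(model_section *s) {
    if (s->read_only && !s->allow_unload) {
        return MODEL_UNLOAD_BUGCHECK;
    }
    s->read_only = false;  /* unprotect first, then release */
    return MODEL_UNLOAD_OK;
}
```

Calling `model_protect` with a flags value of 0 and then `model_unload` yields `MODEL_UNLOAD_BUGCHECK`, while passing `MODEL_ALLOW_UNLOAD` lets the unload succeed.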

Dynamic KDP

Dynamic KDP allows a driver to allocate and initialize read-only memory using services provided by a secure pool, which is managed by the secure kernel. The consumer first creates a secure pool context associated with a tag. All of the consumer’s future memory allocations will be associated with the created secure pool context. After the context is created, read-only allocations can be performed through a new extended parameter to the ExAllocatePool3 API:

PVOID ExAllocatePool3 (POOL_FLAGS Flags, SIZE_T NumberOfBytes, ULONG Tag, PCPOOL_EXTENDED_PARAMETER ExtendedParameters, ULONG Count);

The caller can then specify the size of the allocation and the initial buffer from where to copy the memory in a POOL_EXTENDED_PARAMS_SECURE_POOL data structure. The returned memory region can’t be modified by any entity running in VTL0. In addition, at allocation time, the caller supplies a tag and a cookie value, which are encoded and embedded into the allocation. The consumer can, at any time, validate that an address is within the memory range reserved for dynamic KDP allocations and that the expected cookie and tag are in fact encoded into a given allocation. This allows the caller to check that their pointer to a secure pool allocation has not been switched with a different allocation.

Similar to static KDP, by default the memory region can’t be freed or modified. The caller can specify at allocation time that the allocation is freeable or modifiable using the SECURE_POOL_FLAGS_FREEABLE (1) and SECURE_POOL_FLAGS_MODIFIABLE (2) flags. Using these flags reduces the security of the allocation but allows dynamic KDP memory to be used in scenarios where leaking all allocations would be infeasible, such as allocations that are made per process on the machine.
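The range, tag, and cookie checks described in this section can be illustrated with a small user-mode model. This is a sketch under stated assumptions: the XOR-based encoding is invented for illustration (the text does not describe the real one), all `model_*` and `MODEL_*` names are hypothetical, and only the flag values 1 and 2 come from the text.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Flag values (1 and 2) mirror the text; the names are local to this model. */
#define MODEL_FREEABLE   0x1u
#define MODEL_MODIFIABLE 0x2u

typedef struct {
    uint64_t base;  /* start of the modeled secure pool range */
    uint64_t size;  /* size of the range in bytes */
} model_pool;

typedef struct {
    uint64_t address;  /* address handed back to the caller */
    uint64_t encoded;  /* tag and cookie folded together */
    uint32_t flags;
} model_alloc;

/* Hypothetical encoding, chosen only to keep the example short. */
uint64_t model_encode(uint32_t tag, uint64_t cookie) {
    return cookie ^ (((uint64_t)tag << 32) | tag);
}

model_alloc model_allocate(const model_pool *pool, uint64_t offset,
                           uint32_t tag, uint64_t cookie, uint32_t flags) {
    model_alloc a;
    assert(offset < pool->size);
    a.address = pool->base + offset;
    a.encoded = model_encode(tag, cookie);
    a.flags = flags;
    return a;
}

/* True only if the address is inside the pool and the tag and cookie match. */
bool model_validate(const model_pool *pool, const model_alloc *a,
                    uint32_t tag, uint64_t cookie) {
    bool in_range = a->address >= pool->base &&
                    (a->address - pool->base) < pool->size;
    return in_range && a->encoded == model_encode(tag, cookie);
}

bool model_can_free(const model_alloc *a) {
    return (a->flags & MODEL_FREEABLE) != 0;
}

bool model_can_modify(const model_alloc *a) {
    return (a->flags & MODEL_MODIFIABLE) != 0;
}
```

A caller that keeps the expected tag and cookie can use `model_validate` to detect a swapped pointer: an allocation made with a different cookie fails the check even though its address is inside the pool.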

Implementing KDP on Windows 10

As mentioned, both static KDP and dynamic KDP rely on the physical memory being protected by the SLAT in the hypervisor. When a processor supports the SLAT, it uses another layer for memory address translation. (Note: AMD implements the SLAT through “nested page tables”, while Intel uses the term “extended page tables”.)

The second-level address translation (SLAT)

When the hypervisor enables SLAT support, and a VM is executing in VMX non-root mode, the processor translates an initial virtual address called Guest Virtual Address (GVA, or stage 1 virtual address in ARM64) into an intermediate physical address called Guest Physical Address (GPA, or IPA in ARM64). This translation is still managed by the page tables, addressed by the CR3 control register managed by the guest OS. The result of the translation is a GPA, with the access protection specified in the guest page tables. Note that only software operating in kernel mode can interact with page tables. A rootkit usually operates in kernel mode and can indeed modify the protection of the intermediate physical pages.

The hypervisor helps the processor to translate the GPA using the extended (or nested) page tables. On non-SLAT systems, when a virtual address is not present in the TLB, the processor needs to consult all the page tables in the hierarchy to reconstruct the final physical address. As shown in Figure 1, the virtual address is split into four parts (on LA48 systems). Each part represents the index into a page table of the hierarchy. The physical address of the initial PML4 table is specified by the CR3 register. This explains why the processor is always able to translate the address and get the next physical address of the next table in the hierarchy. It’s important to note that in each page table entry of the hierarchy, the NT kernel specifies a page protection through a set of attributes. The final physical address is accessible only if the sum of the protections specified in each page table entry allows it.

Figure 1. The X64 Stage 1 address translation (Virtual address to guest physical address)
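The stage 1 walk can be sketched in a few lines of C. This is an illustrative model assuming 4 KB pages and 48-bit (LA48) addresses; the type and function names are hypothetical. The permission check covers only the Present and Read/Write bits (bits 0 and 1 of an x64 page table entry) and assumes CR0.WP is set, so that kernel-mode writes honor the Read/Write bit.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

typedef struct {
    unsigned pml4;    /* bits 47..39: index into the PML4 table */
    unsigned pdpt;    /* bits 38..30: index into the PDPT */
    unsigned pd;      /* bits 29..21: index into the page directory */
    unsigned pt;      /* bits 20..12: index into the page table */
    unsigned offset;  /* bits 11..0: byte offset inside the 4 KB page */
} va_parts;

/* Splits a virtual address into the four table indexes and the page offset. */
va_parts split_va(uint64_t va) {
    va_parts p;
    p.pml4   = (unsigned)((va >> 39) & 0x1FF);
    p.pdpt   = (unsigned)((va >> 30) & 0x1FF);
    p.pd     = (unsigned)((va >> 21) & 0x1FF);
    p.pt     = (unsigned)((va >> 12) & 0x1FF);
    p.offset = (unsigned)(va & 0xFFF);
    return p;
}

#define PTE_PRESENT 0x1ULL  /* bit 0 of an x64 page table entry */
#define PTE_WRITE   0x2ULL  /* bit 1 of an x64 page table entry */

/* A write is allowed only if every level of the walk is present and writable. */
bool walk_allows_write(const uint64_t entries[4]) {
    assert(entries != 0);
    for (int i = 0; i < 4; i++) {
        if ((entries[i] & (PTE_PRESENT | PTE_WRITE)) != (PTE_PRESENT | PTE_WRITE)) {
            return false;
        }
    }
    return true;
}
```

Clearing the Read/Write bit in any single entry, for example the leaf PTE, is enough to make `walk_allows_write` return false, which is what "the sum of the protections" means in practice.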

When the SLAT is on, the intermediate physical address specified in the guest’s CR3 register needs to be translated to a real system physical address (SPA). The mechanism is similar: the hypervisor configures the nCR3 field of the active virtual machine control block (VMCB) representing the currently executing VM to the physical address of the nested (or extended) page tables (note that the field is called “EPT pointer” in the Intel architecture). The nested page tables are built in a similar way to standard page tables, so the processor needs to scan the entire hierarchy to find the correct physical address, as illustrated in figure 2. In the figure, “n” indicates nested page tables in the hierarchy, which are managed by the hypervisor, while “g” indicates guest page tables, which are managed by the NT kernel.

Figure 2. The X64 Stage 2 physical address translation (GPA to SPA)

As shown, the final translation of a guest virtual address to a system physical address goes through two translation types: GVA to GPA, configured by the guest VM’s kernel, and GPA to SPA, configured by the hypervisor. Note that in the worst case, the translation involves all four page hierarchy levels, which results in 20 table lookups. The mechanism could be slow and is mitigated by processor support for an enhanced TLB. In the TLB entries, another ID that identifies the currently executing VM is included (called virtual processor identifier or VPID in Intel systems, address space ID or ASID in AMD systems), so the processor can cache the translation result of a virtual address belonging to two different VMs without any collision.
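One way to arrive at the figure of 20 is to count, for each of the four guest levels, four nested lookups to locate the guest table plus one read of the guest entry itself. This counting model is an assumption, not something the text spells out, and the function names below are hypothetical. Under the same model, translating the final GPA of the data page adds one more nested walk.

```c
#include <assert.h>

/* Lookups needed to read every guest page table entry: each guest table is
 * located by a GPA, so reading one guest entry costs `nested_levels` nested
 * lookups plus the read of the guest entry itself. */
unsigned guest_table_lookups(unsigned guest_levels, unsigned nested_levels) {
    assert(guest_levels > 0 && nested_levels > 0);
    return guest_levels * (nested_levels + 1);
}

/* Adds the nested walk that translates the final data GPA into an SPA. */
unsigned full_walk_lookups(unsigned guest_levels, unsigned nested_levels) {
    return guest_table_lookups(guest_levels, nested_levels) + nested_levels;
}
```

With four guest levels and four nested levels, `guest_table_lookups` gives 20 and `full_walk_lookups` gives 24, which shows why caching translations in the TLB matters so much on SLAT systems.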

Figure 3. Nested entry of an NPT page table in the hierarchy

As highlighted in Figure 3, an NPT entry specifies multiple access protection attributes. This allows the hypervisor to further protect the system physical address (the NPT cannot be accessed by any other entity except for the hypervisor itself). When the processor attempts to read, write, or execute an address to which the NPTs disallow access, an NPT violation (EPT violation in Intel architecture) is raised, and a VM exit is generated. A VM exit generated by an NPT violation does not happen frequently. In general, it is produced in nested configurations or when Software MBEC is in use for HVCI. If the NPT violation happens for other reasons, the Microsoft Hypervisor injects an access violation exception to the current virtual processor (VP), which is managed by the guest OS in different ways but typically through a bug check if no exception handler elects to handle the exception.
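The interaction between guest and nested protections can be modeled as two independent permission checks. The sketch below is a simplification with hypothetical names: it checks the guest page tables first and the nested page tables second, and ignores details such as caching and the exact order in which real hardware reports faults.

```c
#include <assert.h>

enum { ACC_READ = 1, ACC_WRITE = 2, ACC_EXEC = 4 };

typedef enum { ACCESS_OK, GUEST_PAGE_FAULT, NPT_VIOLATION } access_result;

/* guest_perms: rights granted by the guest page tables (controlled by VTL0).
 * npt_perms:   rights granted by the nested page tables (hypervisor only). */
access_result check_access(unsigned guest_perms, unsigned npt_perms,
                           unsigned access) {
    assert(access == ACC_READ || access == ACC_WRITE || access == ACC_EXEC);
    if ((guest_perms & access) == 0) {
        return GUEST_PAGE_FAULT;  /* handled inside the guest */
    }
    if ((npt_perms & access) == 0) {
        return NPT_VIOLATION;     /* raises a VM exit to the hypervisor */
    }
    return ACCESS_OK;
}
```

Even if kernel-mode malware marks a page writable in the guest page tables, a write still returns `NPT_VIOLATION` as long as the nested entry grants read access only. That property is what KDP builds on.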

Static KDP implementation

The SLAT protection is the main principle that allows KDP to exist. In Windows, dynamic and static KDP implementations are similar and are managed by the secure kernel. The secure kernel is the only entity that is able to emit the ModifyVtlProtectionMask hypercall to the hypervisor with the goal of modifying the SLAT access protection for a physical page mapped in the lower VTL0.

For static KDP, the NT kernel verifies that the driver is not a session driver or mapped with large pages. If one of these conditions exists, or if the section is a discardable section, static KDP can’t be applied. If the entity that called the MmProtectDriverSection API did not request the target image to be unloadable, the NT kernel performs the first call into the secure kernel, which pins the normal address range (NAR) associated with the driver. The “pinning” operation prevents the address space of the driver from being reused, making the driver not unloadable. The NT kernel then brings all the pages that belong to the section into memory and makes them private (i.e., not addressed by the prototype PTEs). The pages are then marked as read-only in the leaf PTE structures (highlighted as “gPTE” in figure 2). At this stage, the NT kernel can finally call the secure kernel to protect the underlying physical pages through the SLAT. The secure kernel applies the protection in two phases:

- Register all the physical pages that belong to the section and mark them as “owned by VTL0” by adding the proper NTEs (normal table entries) in the database and updating the underlying secure PFNs, which belong to VTL1. This allows the secure kernel to track the physical pages, which still belong to the NT kernel.

- Apply the read-only protection to the VTL0 SLAT table. The hypervisor uses one SLAT table and VMCB per VTL.

The target image’s section is now protected. No entity in VTL0 will be able to write to any of the pages belonging to the section. As highlighted, the secure kernel in this scenario has protected some memory pages that were initially allocated by the NT kernel in VTL0.

Dynamic KDP implementation

Dynamic KDP uses services provided by the new segment heap for allocating memory from a secure pool, which is almost entirely managed by the secure kernel.

In early phases of its boot process, the NT memory manager calculates the randomized virtual base address of a 512GB region used for the secure pool, which spans exactly one of the 256 kernel PML4 entries. Later in phase 1, the NT memory manager emits a secure call, internally named INITIALIZE_SECURE_POOL, which includes the calculated memory region and allows the secure kernel to initialize the secure pool.

The secure kernel creates a NAR representing the entire 512GB virtual region belonging to the unsecure NT kernel, and initializes all the relative NTEs belonging to the NAR. The secure pool virtual address space in the secure kernel is 256GB wide, which means that the PML4 mapping it is shared with some other content and is not at the same base address compared to the NT one. So, while initializing the secure pool descriptor, the secure kernel also calculates a delta value, which is the difference between the secure pool base address in the secure kernel and the one reserved in the NT kernel (as shown in figure 4). This is important, because it allows the secure kernel to specify to the NT kernel where to map a physical page belonging to the secure pool.

Figure 4. The secure pool VTL1 to VTL0 delta value
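The delta arithmetic is simple enough to sketch directly. The function names and the base addresses used with them are hypothetical; the only facts taken from the text are that the NT region covers exactly one PML4 entry (512GB) and that the delta is the secure kernel base minus the NT kernel base.

```c
#include <assert.h>
#include <stdint.h>

#define PML4_ENTRY_SPAN (1ULL << 39)  /* 512 GB covered by one PML4 entry */

/* Delta between the secure pool base in the secure kernel (VTL1) and the
 * base reserved in the NT kernel (VTL0). Unsigned arithmetic wraps modulo
 * 2^64, so the result is usable whichever base is numerically higher. */
uint64_t secure_pool_delta(uint64_t vtl1_base, uint64_t vtl0_base) {
    /* The NT region spans exactly one PML4 entry, so its base is aligned. */
    assert(vtl0_base % PML4_ENTRY_SPAN == 0);
    return vtl1_base - vtl0_base;
}

/* Given a VTL1 address inside the secure pool, compute where the NT kernel
 * should map the same physical page in VTL0. */
uint64_t vtl0_address_for(uint64_t vtl1_va, uint64_t delta) {
    return vtl1_va - delta;
}
```

For example, with an assumed NT base of 0xFFFF910000000000 and an assumed secure kernel base of 0xFFFFF6C040000000, a page at offset 0x3000 from the secure kernel base maps to offset 0x3000 from the NT base.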

When software running in VTL0 kernel requests some memory to be allocated from the secure pool, a secure call is made to the secure kernel, which invokes the internal RtlpHpAllocateHeap heap function, which is exposed in both VTLs. If the segment heap calculates that there are no more free memory segments left in the secure pool, it calls the SkmmAllocatePoolMemory routine, which allocates new memory pages for the pool. The heap always tries to avoid committing new memory pages if it doesn’t really need to.

Like the NtAllocateVirtualMemory API, which is exposed by the NT kernel, the SkmmAllocatePoolMemory API supports two kinds of operations: reserve and commit. A reserve operation allows the secure kernel’s memory manager to reserve some PTEs needed for the pool allocation. A commit operation actually allocates free physical pages.

Physical pages are allocated from a bundle of free pages that belong to the secure kernel, whose secure PFNs are in the secure state, and are mapped in the VTL1 page tables, which means that the entire VTL1 page table hierarchy needed to map them is allocated. As with static KDP, the secure kernel sends the “ModifyVtlProtectionMask” hypercall to the hypervisor, with the goal of mapping the physical pages as read-only in the VTL0 SLAT table. After the pages become accessible to VTL0, the secure kernel copies the data specified by the caller and calls back into the NT kernel.

The NT kernel uses services provided by the memory manager to map the guest physical pages in VTL0. Remember that the entire root partition physical address space of both VTL0 and VTL1 is mapped with the identity mapping, meaning that a guest physical page number valid in VTL0 is also valid in VTL1. The secure kernel asks the NT memory manager to map a page belonging to the secure pool by knowing exactly which virtual address the page should be mapped to. This is thanks to the delta value calculated previously in phase 1 (figure 4).

The allocation is returned to the caller in VTL0. The underlying pages, as with static KDP, are no longer writable by any entity in VTL0.

Astute readers will note that the above description of KDP deals only with establishing SLAT protections for the guest physical address(es) backing a given protected memory region. KDP does not enforce how the virtual address range mapping a protected region is translated. Today, the secure kernel verifies only on a periodic basis that protected memory regions translate to the appropriate, SLAT-protected GPA. The design of KDP permits the possibility of future extensions to assert more direct control over the address translation hierarchy of protected memory regions.

Applications of KDP on inbox components

To demonstrate how KDP can provide value to two inbox components, we’re highlighting how it’s implemented in CI.dll, the code integrity engine in Windows, and the Windows Defender System Guard runtime attestation engine.

First, CI.dll. The goal of using KDP is to protect internal policy state after it has been initialized (i.e., read from the registry or generated at boot time). These data structures are critical to protect: if they can be tampered with, a driver that is properly signed but vulnerable could attack the policy data structures and then install an unsigned driver on the system. With KDP, this attack is mitigated by ensuring the policy data structures cannot be tampered with.

Second, Windows Defender System Guard. To provide runtime attestation, the attestation broker is only allowed to connect to the attestation driver one time. This is because the state is stored in VTL1 memory. The driver stores the connection state in its memory, and this state needs to be protected to prevent an attacker from trying to reset the connection with a potentially tampered-with broker agent. KDP can lock these variables and ensure that only a single connection between the broker and driver can be established.

Code integrity and Windows Defender System Guard are two of the critical features of Secured-core PCs. KDP enhances protection for these vital security systems and raises the bar that attackers need to overcome to compromise Secured-core PCs.

These are just a few examples of how useful protecting kernel and driver memory as read-only can be for the security and integrity of the system. As KDP is adopted more widely, we expect to expand the scope of protection against data corruption attacks.

Getting started with KDP

Neither dynamic nor static KDP has any requirements beyond those needed to run virtualization-based security. In ideal conditions, VBS can be started on any computer that supports:

- Intel, AMD or ARM virtualization extensions

- Second-level address translation: NPT for AMD, EPT for Intel, Stage 2 address translation for ARM

- Optionally, hardware MBEC, which reduces the performance cost associated with HVCI

More info on the requirements for VBS can be found here. On Secured-core PCs, virtualization-based security is supported and hardware-backed security features are enabled by default. Customers can find Secured-core PCs from a variety of partner vendors that feature the comprehensive Secured-core security features that are now enhanced by KDP.

Andrea Allievi

Security Kernel Core Team
