Cyberattacks against machine learning systems are more common than you think

Machine learning (ML) is transforming critical areas such as finance, healthcare, and defense, and it affects nearly every aspect of our lives. Yet many businesses, eager to capitalize on advances in ML, have not scrutinized the security of their ML systems. Today, together with MITRE and with contributions from 11 organizations, including IBM, NVIDIA, and Bosch, Microsoft is releasing the Adversarial ML Threat Matrix, an industry-focused open framework that empowers security analysts to detect, respond to, and remediate threats against ML systems.

Over the last four years, Microsoft has seen a notable increase in attacks on commercial ML systems. Market reports are also drawing attention to this problem: Gartner’s Top 10 Strategic Technology Trends for 2020, published in October 2019, predicts that “Through 2022, 30% of all AI cyberattacks will leverage training-data poisoning, AI model theft, or adversarial samples to attack AI-powered systems.” Despite these compelling reasons to secure ML systems, Microsoft’s survey of 28 businesses found that most industry practitioners have yet to come to terms with adversarial machine learning. Twenty-five of the 28 businesses indicated that they don’t have the right tools in place to secure their ML systems, and they are explicitly looking for guidance. This lack of preparation is not limited to smaller organizations: we spoke to Fortune 500 companies, governments, non-profits, and small and mid-sized organizations.

Our survey pointed to marked cognitive dissonance, especially among security analysts, who generally believe that risk to ML systems is a futuristic concern. This is a problem because cyberattacks on ML systems are now on the uptick. For instance, in 2020 we saw the first CVE for an ML component in a commercial system, and SEI/CERT issued the first vulnerability note highlighting how many current ML systems can be subjected to arbitrary misclassification attacks that compromise their confidentiality, integrity, and availability. The academic community has been sounding the alarm since 2004 and has routinely shown that ML systems, if not mindfully secured, can be compromised.

Introducing the Adversarial ML Threat Matrix

Microsoft worked with MITRE to create the Adversarial ML Threat Matrix because we believe the first step in empowering security teams to defend against attacks on ML systems is to have a framework that systematically organizes the techniques malicious adversaries use to subvert ML systems. We hope that the security community can use the tabulated tactics and techniques to bolster their monitoring strategies around their organization’s mission-critical ML systems.

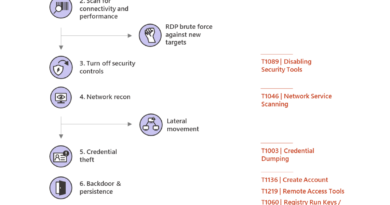

- Primary audience is security analysts: We think that securing ML systems is an infosec problem. The goal of the Adversarial ML Threat Matrix is to position attacks on ML systems in a framework that security analysts can use to orient themselves to these new and emerging threats. The matrix is structured like the ATT&CK framework, owing to its wide adoption in the security analyst community, so security analysts do not have to learn a new or different framework to learn about threats to ML systems. At the same time, the Adversarial ML Threat Matrix differs markedly from ATT&CK, because attacks on ML systems are inherently different from traditional attacks on corporate networks.

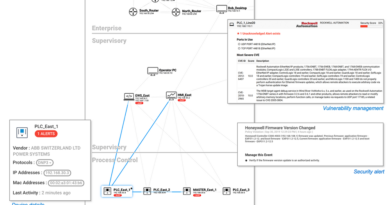

- Grounded in real attacks on ML systems: We are seeding this framework with a curated set of vulnerabilities and adversary behaviors that Microsoft and MITRE have vetted to be effective against production ML systems. This way, security analysts can focus on realistic threats to ML systems. We also incorporated lessons from Microsoft’s extensive experience in this space into the framework: for instance, we found that model stealing is not the attacker’s end goal but in fact leads to more insidious model evasion. We also found that when attacking an ML system, attackers combine “traditional techniques” such as phishing and lateral movement with adversarial ML techniques.

Open to the community

We recognize that adversarial ML is a significant area of research in academia, so we also garnered input from researchers at the University of Toronto, Cardiff University, and the Software Engineering Institute at Carnegie Mellon University. The Adversarial ML Threat Matrix is a first attempt at collecting known adversary techniques against ML systems, and we invite feedback and contributions. As the threat landscape evolves, this framework will be modified with input from the security and machine learning communities.

“When it comes to Machine Learning security, the barriers between public and private endeavors and responsibilities are blurring; public sector challenges like national security will require the cooperation of private actors as much as public investments. So, in order to help address these challenges, we at MITRE are committed to working with organizations like Microsoft and the broader community to identify critical vulnerabilities across the machine learning supply chain.

This framework is a first step in helping to bring communities together to enable organizations to think about the emerging challenges in securing machine learning systems more holistically.”

– Mikel Rodriguez, Director of Machine Learning Research, MITRE

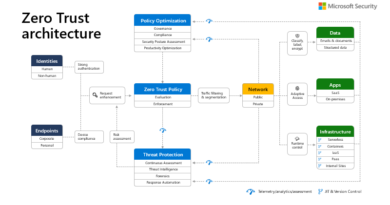

This initiative is part of Microsoft’s commitment to develop and deploy ML systems securely. The AI, Ethics, and Effects in Engineering and Research (Aether) Committee provides guidance to engineers to develop safe, secure, and reliable ML systems and uphold customer trust. To comprehensively protect and monitor ML systems against active attacks, the Azure Trustworthy Machine Learning team routinely assesses the security posture of critical ML systems and works with product teams and front-line defenders from the Microsoft Security Response Center (MSRC). We routinely share the lessons from these activities with the community:

- For engineers and policymakers, in collaboration with the Berkman Klein Center at Harvard University, we released a taxonomy documenting various ML failure modes.

- For developers, we released threat modeling guidance specifically for ML systems.

- For security incident responders, we released our own bug bar to systematically triage attacks on ML systems.

- For academic researchers, Microsoft opened a $300K Security AI RFP and, as a result, is partnering with multiple universities to push the boundaries of this space.

- For industry practitioners and security professionals to develop muscle in defending and attacking ML systems, Microsoft hosted a realistic machine learning evasion competition.

This effort is aimed at security analysts and the broader security community. The matrix and the case studies are meant to help them strategize protection and detection, and because the framework is seeded with real attacks on ML systems, they can carefully carry out similar exercises in their own organizations and validate their monitoring strategies.

To learn more about this effort, visit the Adversarial ML Threat Matrix GitHub repository, and read MITRE’s announcement and the SEI/CERT blog post on the topic.
