Attack AI systems in Machine Learning Evasion Competition

Today, we are launching MLSEC.IO, an educational Machine Learning Security Evasion Competition (MLSEC) for the AI and security communities to exercise their muscle attacking critical AI systems in a realistic setting. Hosted and sponsored by Microsoft, alongside NVIDIA, CUJO AI, VMRay, and MRG Effitas, the competition rewards participants who efficiently evade AI-based malware detectors and AI-based phishing detectors.

Machine learning powers critical applications in virtually every industry: finance, healthcare, infrastructure, and cybersecurity. Microsoft is seeing an uptick of attacks on commercial AI systems that could compromise the confidentiality, integrity, and availability guarantees of these systems. Publicly known cases documented by MITRE’s ATLAS framework show that the proliferation of AI systems brings an increased risk that the machine learning powering them can be manipulated to achieve an adversary’s goals. While the risks are inherent in all deployed machine learning models, the threat is especially explicit in cybersecurity, where machine learning models are increasingly relied on to detect threat actors’ tools and behaviors. Market surveys have consistently indicated that the security and privacy of AI systems are top concerns for executives. According to CCS Insight’s survey of 700 senior IT leaders in 2020, security is now the biggest hurdle companies face with AI, cited by over 30 percent of respondents.[1]

However, security practitioners are often unaware of how to clear this new hurdle. A recent Microsoft survey found that 25 out of 28 organizations did not have the right tools in place to secure their AI systems. While academic researchers have been studying how to attack AI systems for close to two decades, awareness among practitioners is low. That is why one recommendation for business leaders from the 2021 Gartner report Top 5 Priorities for Managing AI Risk Within Gartner’s MOST Framework[2] is that organizations “Drive staff awareness across the organization by leading a formal AI risk education campaign.”

It is critical to democratize the knowledge to secure AI systems. That is why Microsoft recently released Counterfit, a tool born out of our own need to assess Microsoft’s AI systems for vulnerabilities with the goal of proactively securing AI services. For those new to adversarial machine learning, NVIDIA released MINTNV, a hack-the-box style environment to explore and build their skills.

Participate in MLSEC.IO

With the launch today of MLSEC.IO, we aim to highlight how security models can be evaded by motivated attackers and allow practitioners to exercise their muscles attacking critical machine learning systems used in cybersecurity.

“There is a lack of practical knowledge about securing or attacking AI systems in the security community. Competitions like Microsoft’s MLSEC democratize adversarial machine learning knowledge for the offensive and defensive security communities, as well as the machine learning community. MLSEC’s hands-on approach is an exciting entry point into AML.” - Christopher Cottrell, AI Red Team Lead, NVIDIA

The competition involves two challenges beginning on August 6 and ending on September 17, 2021: an Anti-Malware Evasion track and an Anti-Phishing Evasion track.

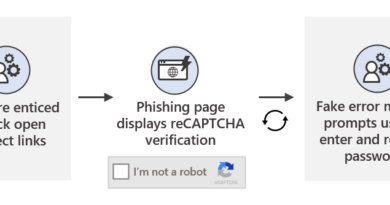

- Anti-Phishing Evasion Track: Machine learning is routinely used to detect phishing, a highly successful attacker technique for gaining initial access. In this track, contestants play the role of an attacker and attempt to evade a suite of anti-phishing models. The phishing machine learning models were custom built by CUJO AI for this competition only.

- Anti-Malware Evasion Track: This challenge provides an alternative scenario for attackers wishing to bypass machine-learning-based antivirus: change an existing malicious binary in a way that disguises it from the antimalware model.
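
To make the idea of evasion concrete for readers new to adversarial machine learning, here is a minimal sketch on a toy model. It is not the competition's detector or scoring method: the feature names, weights, threshold, and the `evade` helper are all hypothetical and chosen only for illustration. It assumes a linear detector over a few numeric features and an attacker who may change only the features that do not affect what the sample does.

```python
from typing import Dict, Tuple

# Hypothetical toy detector: a linear score over made-up numeric features.
# Weights, feature names, and threshold are illustrative assumptions only.
WEIGHTS: Dict[str, float] = {
    "risky_api_calls": 0.6,
    "high_entropy_sections": 0.5,
    "benign_strings": -0.2,
    "padding_blocks": -0.3,
}
THRESHOLD = 1.0

# Features the attacker can change without altering the sample's behavior.
MUTABLE = ("benign_strings", "padding_blocks")


def score(features: Dict[str, float]) -> float:
    """Weighted sum of feature values; higher means more suspicious."""
    return sum(WEIGHTS[name] * value for name, value in features.items())


def is_detected(features: Dict[str, float]) -> bool:
    """The toy model flags a sample when its score reaches the threshold."""
    return score(features) >= THRESHOLD


def evade(features: Dict[str, float], max_steps: int = 20) -> Tuple[Dict[str, float], int]:
    """Greedily raise the mutable feature with the most negative weight
    until the sample is no longer flagged or the step budget runs out."""
    current = dict(features)  # work on a copy; leave the input untouched
    steps = 0
    while is_detected(current) and steps < max_steps:
        best = min(MUTABLE, key=lambda name: WEIGHTS[name])
        current[best] += 1.0
        steps += 1
    return current, steps


if __name__ == "__main__":
    sample = {
        "risky_api_calls": 2.0,
        "high_entropy_sections": 1.0,
        "benign_strings": 0.0,
        "padding_blocks": 0.0,
    }
    adversarial, steps = evade(sample)
    print("detected before:", is_detected(sample))
    print("detected after: ", is_detected(adversarial), "in", steps, "steps")
```

The sketch only touches features the attacker is assumed to control and leaves the behavior-related features unchanged, which mirrors the constraint in the track description that the modified binary must remain the same malicious program. Real detectors are far more complex than a four-feature linear model.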

In addition, for each of the Attacker Challenge tracks, the highest-scoring submission that extends and leverages Counterfit—Microsoft’s open-source tool for investigating the security of machine learning models—will be awarded a bonus prize.

“The security evasion challenge creates new pathways into cybersecurity and opens up access for a broader base of talent. This year, to lower barriers to entry, we are introducing the phishing challenge, while still strongly encouraging people without significant experience in malware to participate.”—Zoltan Balazs, Head of Vulnerability Research Lab at CUJO AI and cofounder of the competition.

Key details about the competition

- The competition runs from August 6 to September 17, 2021. Registration will remain open throughout the duration of the competition.

- Winners will be announced on October 27, 2021, and contacted via email.

- Prizes for first place and honorable mentions, as well as a bonus prize, will be awarded for each of the two tracks.

Learn More

To learn more about the 2021 Machine Learning Security Evasion Competition:

- Register now to begin participating on August 6, 2021, to exercise your offensive security muscle.

- Visit the Counterfit GitHub Repository to learn more about Counterfit.

- If you are new to adversarial machine learning, practice attacking AI systems via NVIDIA’s MINTNV hack-the-box style challenge.

This competition is part of broader efforts at Microsoft to empower engineers to securely develop and deploy AI systems. We recommend using it alongside the following resources:

- For security analysts to orient to threats against AI systems, Microsoft, in collaboration with MITRE, released an ATT&CK-style AdvML Threat Matrix complete with case studies of attacks on production machine learning systems.

- For security incident responders, we released our own bug bar to systematically triage attacks on machine learning systems.

- For developers, we released threat modeling guidance specifically for machine learning systems.

- For engineers and policymakers, Microsoft, in collaboration with the Berkman Klein Center at Harvard University, released a taxonomy documenting various machine learning failure modes.

Register now to participate in the Machine Learning Security Evasion Competition that begins on August 6 and ends on September 17, 2021. Winners will be announced on October 27, 2021.


[1] CCS Insight, Senior Leadership IT Investment Survey, Nick McQuire et al., 18 August 2020.

[2] Gartner, Top 5 Priorities for Managing AI Risk Within Gartner’s MOST Framework, Avivah Litan et al., 15 January 2021.
